In the age of big data, understanding data science and its underlying processes is essential for businesses aiming to harness the power of data-driven insights.

Let’s delve deeper and explore the data science process’s definition, methods, and complexity.

From data collection and preprocessing to modelling and interpretation, unpacking the data science process reveals a systematic approach to extracting actionable intelligence from raw data, giving organizations considerably more flexibility in their decision-making.

Data science is growing rapidly as companies increasingly depend on data-driven insights to gain a competitive edge.

Pursuing a data science course equips individuals with the skills needed to navigate this burgeoning field. A well-designed data science course provides a comprehensive foundation, from mastering programming languages like Python and R to understanding statistical techniques and machine learning algorithms.

Moreover, hands-on experience with real-world datasets and realistic projects enhances proficiency.

With demand for data scientists surging across industries, embarking on a data science course opens doors to lucrative career opportunities and helps learners stay relevant and competitive in a dynamic job market.

What is Data Science?

Data science is a multidisciplinary field that uses scientific methods, algorithms, and systems to extract insights and knowledge from large volumes of structured and unstructured data.

It encompasses diverse techniques, including data mining, machine learning, statistical analysis, and visualization, to uncover patterns, trends, and correlations within datasets.

Data scientists leverage programming languages like Python, R, and SQL, along with tools and frameworks like TensorFlow and Apache Spark, to manipulate, analyze, and interpret data.

Data science aims to derive actionable insights and support informed decisions that drive business growth, optimize processes, and enhance decision-making across various domains, including finance, healthcare, marketing, and technology.

With the proliferation of big data and technological advancements, data science has emerged as an essential enabler of innovation.

It enables organizations to unlock the value hidden within their data and gain a competitive edge in a modern, data-driven world.

What is the Data Science Process?

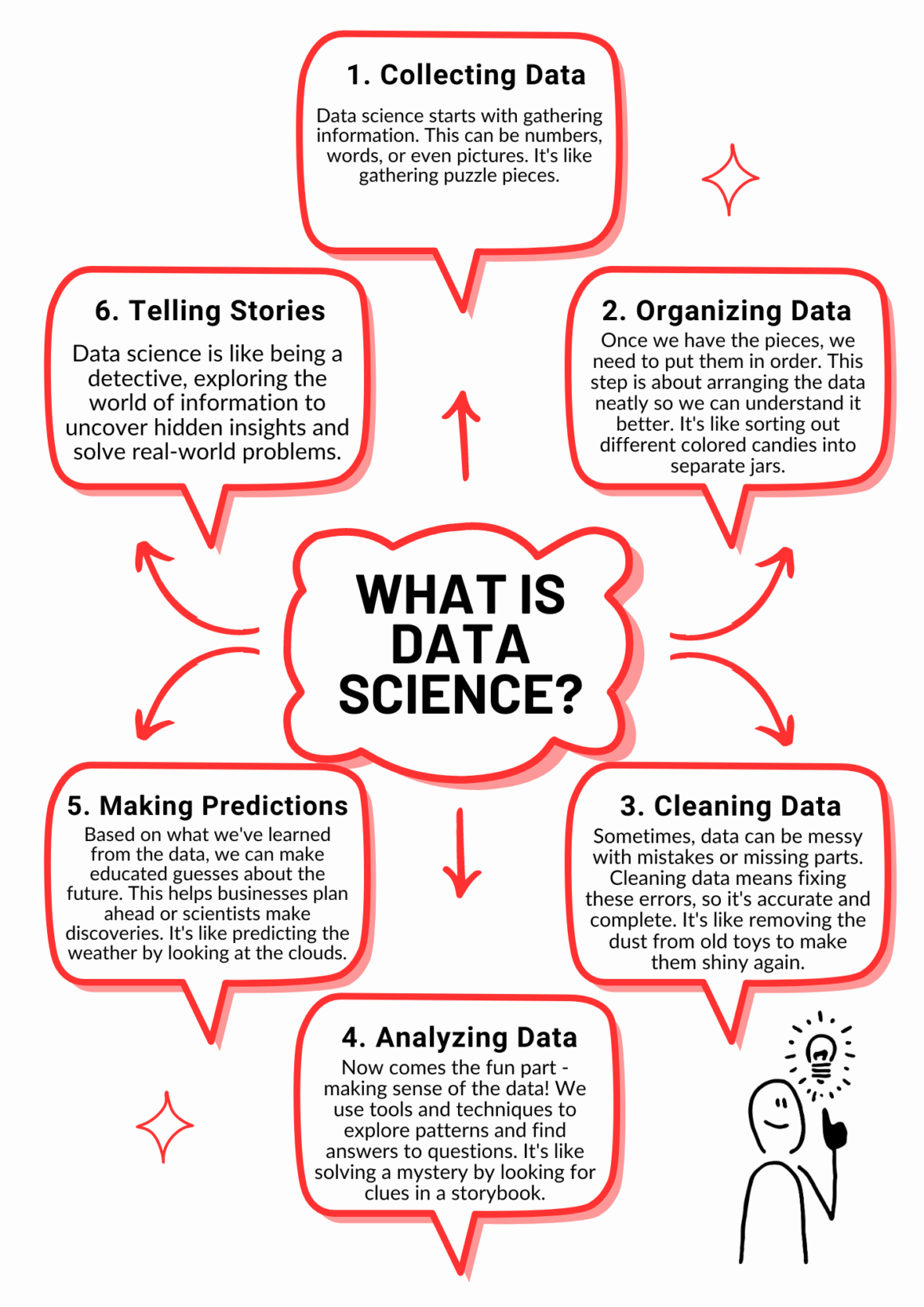

The data science process is a systematic approach to extracting insights from data through structured steps.

It encompasses data collection, preparation, analysis, and interpretation, culminating in actionable insights that drive decision-making.

By following this process, data scientists can effectively navigate the complexities of big data and derive meaningful conclusions to solve real-world problems.

Data Science Process

Data science is typically conceptualized as a five-step process, often called a lifecycle:

Capture: In the capture phase of data science, the primary objective is to gather data from diverse sources, such as databases, APIs, web scraping, and sensors.

This process involves identifying relevant data sources and determining the best methods for data collection.

Data can be structured, semi-structured, or unstructured, requiring tailored approaches for acquisition. Techniques such as data logging, data extraction, and data ingestion pipelines are commonly employed to capture data while ensuring its quality and integrity.

Effective data capture lays the foundation for subsequent analysis and decision-making, making it an essential first step in the data science lifecycle.
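To make the capture phase more concrete, here is a minimal sketch of a small ingestion step. It assumes two hypothetical sources, a CSV export and a JSON API response, both held as in-memory strings so the example runs on its own. The field names, the sample values, and the rule of setting aside records with missing fields are illustrative assumptions, not a prescribed method.

```python
import csv
import io
import json

# Hypothetical sample inputs standing in for a database export and an API response.
CSV_EXPORT = "customer_id,region,spend\n1,north,120.5\n2,south,80.0\n"
API_RESPONSE = (
    '[{"customer_id": 3, "region": "east", "spend": 42.0},'
    ' {"customer_id": 4, "region": "west"}]'
)

# Assumed integrity rule: every captured record must carry these fields.
REQUIRED_FIELDS = ("customer_id", "region", "spend")


def capture_csv(text):
    """Read structured rows from CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def capture_json(text):
    """Read semi-structured records from a JSON array."""
    return json.loads(text)


def ingest(sources):
    """Combine records from all sources, setting aside incomplete ones."""
    accepted, rejected = [], []
    for source_name, records in sources.items():
        for record in records:
            # Tag each record with its origin so it can be traced later.
            tagged = dict(record, source=source_name)
            if all(field in record for field in REQUIRED_FIELDS):
                accepted.append(tagged)
            else:
                rejected.append(tagged)
    return accepted, rejected


accepted, rejected = ingest({
    "csv_export": capture_csv(CSV_EXPORT),
    "api": capture_json(API_RESPONSE),
})
print(len(accepted), "accepted;", len(rejected), "rejected")
```

Tagging each record with its source and keeping rejected records, rather than silently dropping them, is one simple way to protect integrity at the point of capture.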

Maintain: Data maintenance is essential for preserving the quality, integrity, and accessibility of data throughout its lifecycle. This phase encompasses numerous tasks to ensure that data stays accurate, consistent, and up to date.

Data cleansing involves identifying and rectifying errors, inconsistencies, and missing values in the dataset. Data transformation may involve restructuring or aggregating data to facilitate analysis.

Additionally, data normalization ensures that data is stored in a standardized format, improving its usability across different applications. Continuous monitoring and management of data are essential to address evolving requirements and maintain data quality.
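The cleansing and standardization tasks described above can be sketched in a few lines. The records below are made up for illustration. Filling missing values with the median is only one of several possible strategies and is an assumption of this example.

```python
from statistics import median

# Hypothetical raw records with inconsistent formatting, a duplicate, and a gap.
raw_records = [
    {"customer_id": "1", "region": " North ", "spend": "120.5"},
    {"customer_id": "2", "region": "south", "spend": ""},   # missing value
    {"customer_id": "2", "region": "south", "spend": ""},   # duplicate row
    {"customer_id": "3", "region": "EAST", "spend": "80"},
]


def clean(records):
    """Deduplicate, standardize formats, and fill missing spend values."""
    cleaned = []
    seen_ids = set()
    for record in records:
        customer_id = int(record["customer_id"])
        if customer_id in seen_ids:
            continue  # keep only the first row for each customer
        seen_ids.add(customer_id)
        spend_text = record["spend"].strip()
        cleaned.append({
            "customer_id": customer_id,
            # Standardized layout: trimmed, lower-case region names.
            "region": record["region"].strip().lower(),
            "spend": float(spend_text) if spend_text else None,
        })

    # Assumed imputation strategy: replace gaps with the median of known values.
    # This sketch assumes at least one spend value is present.
    known = [row["spend"] for row in cleaned if row["spend"] is not None]
    fill_value = median(known)
    for row in cleaned:
        if row["spend"] is None:
            row["spend"] = fill_value
    return cleaned


cleaned_records = clean(raw_records)
for row in cleaned_records:
    print(row)
```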

Process: The process phase involves preparing and preprocessing data to make it suitable for analysis. This includes tasks such as data transformation, feature engineering, and data integration.

Data may undergo normalization, scaling, or encoding to standardize it and enhance its usability for analysis. Feature engineering involves selecting, creating, or modifying features to improve the performance of machine learning algorithms.

Data integration involves combining data from multiple sources to create a unified dataset for analysis. The process phase aims to ensure that data is structured, relevant, and conducive to deriving meaningful insights through analysis.
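As an illustration of integration, scaling, encoding, and feature engineering, the sketch below joins two hypothetical sources on a shared key and builds a small feature table. The datasets, the column names, and the derived `avg_order_value` feature are assumptions chosen for the example. Min-max scaling and one-hot encoding are used here as common choices, not the only ones.

```python
# Hypothetical sources: customer attributes and order totals.
customers = [
    {"customer_id": 1, "region": "north"},
    {"customer_id": 2, "region": "south"},
    {"customer_id": 3, "region": "north"},
]
orders = [
    {"customer_id": 1, "total_spend": 100.0, "order_count": 4},
    {"customer_id": 2, "total_spend": 300.0, "order_count": 6},
    {"customer_id": 3, "total_spend": 200.0, "order_count": 10},
]


def integrate(left, right, key):
    """Inner-join two lists of records on a shared key."""
    index = {row[key]: row for row in right}
    return [{**row, **index[row[key]]} for row in left if row[key] in index]


def min_max_scale(values):
    """Rescale numbers to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(value - low) / (high - low) for value in values]


def one_hot(values, prefix):
    """Encode a categorical column as 0/1 indicator columns."""
    categories = sorted(set(values))
    return [
        {f"{prefix}_{category}": int(value == category) for category in categories}
        for value in values
    ]


dataset = integrate(customers, orders, "customer_id")
scaled_spend = min_max_scale([row["total_spend"] for row in dataset])
region_flags = one_hot([row["region"] for row in dataset], prefix="region")

features = []
for row, spend, flags in zip(dataset, scaled_spend, region_flags):
    features.append({
        "customer_id": row["customer_id"],
        "spend_scaled": spend,
        # Engineered feature: average value of a single order.
        "avg_order_value": row["total_spend"] / row["order_count"],
        **flags,
    })

for feature_row in features:
    print(feature_row)
```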

Analyze: Analysis is the core of data science, where data is explored and interpreted to extract insights and patterns.

This phase involves applying statistical techniques, machine learning algorithms, and data mining methods to uncover relationships and trends within the dataset.

Exploratory data analysis (EDA) techniques, including data visualization and descriptive statistics, help in understanding the underlying patterns and distributions in the data.

Predictive modelling techniques, such as regression or classification, are used to make predictions or classify data based on historical patterns. The analysis phase seeks to derive actionable insights that inform decision-making and drive business value.
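A small sketch can show how descriptive statistics and a simple predictive model fit together. The numbers below are invented and deliberately fall on a straight line so the result is easy to check by hand. Ordinary least squares with a single predictor is used as one basic instance of regression, and the variable names are assumptions.

```python
from statistics import mean, stdev

# Hypothetical historical data: advertising spend and resulting sales.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.0, 5.0, 7.0, 9.0, 11.0]


def describe(values):
    """Basic descriptive statistics used in exploratory analysis."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "stdev": stdev(values),
    }


def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    variance = sum((x - x_bar) ** 2 for x in xs)
    slope = covariance / variance
    intercept = y_bar - slope * x_bar
    return slope, intercept


summary = describe(sales)
slope, intercept = fit_line(ad_spend, sales)

# Predict sales for a spend level not seen in the historical data.
predicted_sales = slope * 6.0 + intercept

print("sales summary:", summary)
print("slope:", slope, "intercept:", intercept)
print("predicted sales at spend 6.0:", predicted_sales)
```

Real datasets are noisy, so in practice a fitted model would also be evaluated on held-out data before anyone relies on its predictions.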

Communicate: Communication is crucial for effectively conveying insights and findings derived from data analysis. This phase involves presenting results, visualizations, and recommendations to stakeholders clearly and understandably.

Data visualization techniques such as charts, graphs, and dashboards are used to communicate complex data visually. Narratives, summaries, and reports help provide context and interpretation of the analysis results.

Effective communication ensures that decision-makers understand and act upon insights, leading to informed decisions and actions based on data-driven evidence. Additionally, feedback and stakeholder collaboration facilitate continuous improvement and refinement of analysis approaches.
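Even without a charting library, the idea of pairing a visual with a short narrative can be sketched in plain text. The revenue figures and region names below are hypothetical, and a real report would more likely use a plotting or dashboard tool.

```python
# Hypothetical analysis result: revenue by region.
revenue_by_region = {"North": 50000, "South": 30000, "East": 20000}


def text_bar_chart(values, width=20):
    """Render a horizontal bar chart as text, largest value first."""
    peak = max(values.values())
    label_width = max(len(label) for label in values)
    lines = []
    ranked = sorted(values.items(), key=lambda item: item[1], reverse=True)
    for label, value in ranked:
        bar = "#" * round(width * value / peak)
        lines.append(f"{label.ljust(label_width)} | {bar} {value:,.0f}")
    return "\n".join(lines)


def headline(values):
    """Summarize the main finding in one sentence for stakeholders."""
    top = max(values, key=values.get)
    share = values[top] / sum(values.values())
    return f"{top} is the largest region, contributing {share:.0%} of revenue."


chart = text_bar_chart(revenue_by_region)
summary_sentence = headline(revenue_by_region)

print(summary_sentence)
print(chart)
```

Leading with the one-sentence finding and following it with the chart mirrors the point above: the visual carries the detail, while the narrative tells decision-makers what to take from it.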

Conclusion

Pursuing a data science course allows individuals to develop the knowledge, skills, and tools to navigate each stage of this process successfully. Through hands-on learning and practical experience, participants are introduced to data management, analysis, and communication techniques.

Ultimately, data science education empowers individuals to harness the power of data and deliver meaningful results across industries.